Artificial intelligence is transforming how we analyse and understand visual content, but not all “visual recognition” technologies are created equal. Today, two key methods, AI-driven content classification and Videntifier’s visual fingerprint matching, might be seen as competitors. Rather than competing, however, they complement each other: used together, they are more efficient than either one used on its own.

Below is a clear breakdown of why these technologies complement each other, how they differ, and where their strengths overlap.

Visual Fingerprints vs. AI Classifiers: Two Very Different Tools

Videntifier: Identifying the Exact Content

Videntifier’s core technology revolves around visual fingerprints—unique, robust visual markers extracted from images and videos. These fingerprints (also known as perceptual hashes) make it possible to determine:

- whether two pieces of content match,

- whether content is identical or modified,

- whether a portion of a video appears inside another,

- and so on.

This process focuses on recognition rather than interpretation. It answers: “Have I seen this exact content before?”

This gives Videntifier unmatched precision, even if the content has been cropped, resized, recolored, or otherwise altered.
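To make the idea of a perceptual fingerprint more concrete, here is a minimal sketch of a generic average hash (aHash) in Python. It is not Videntifier’s algorithm, which is proprietary and designed to be far more robust. The function names, the 8x8 grid, and the 5-bit match threshold are illustrative assumptions. A simple aHash tolerates uniform brightness changes, but unlike the fingerprints described above it does not survive cropping.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Compute a 64-bit average hash from an 8x8 grid of grayscale values (0-255).

    Assumes the image has already been downscaled to 8x8 and converted to
    grayscale; doing that first is what lets resized copies hash alike.
    """
    flat = [p for row in pixels for p in row]
    if len(flat) != 64:
        raise ValueError("expected an 8x8 grayscale grid")
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        # One bit per pixel: 1 if the pixel is brighter than the image mean.
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bits in which two hashes differ."""
    return bin(a ^ b).count("1")


def is_match(a: int, b: int, max_distance: int = 5) -> bool:
    """Treat two hashes as the same content if they differ in only a few bits."""
    return hamming_distance(a, b) <= max_distance


if __name__ == "__main__":
    original = [[(r * 8 + c) * 3 for c in range(8)] for r in range(8)]
    brighter = [[p + 30 for p in row] for row in original]  # uniform brightness shift
    inverted = [[255 - p for p in row] for row in original]  # visually different image
    h = average_hash(original)
    print(is_match(h, average_hash(brighter)))  # True (distance 0)
    print(is_match(h, average_hash(inverted)))  # False (distance 64)
```

The brightened copy keeps exactly the same hash because every pixel moves by the same amount as the mean. The inverted image flips every bit. This illustrates the distinction between “modified” and “different” content in a very simplified form.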

AI Classification: Understanding What Content Is

AI-based content moderation services work very differently. Instead of matching content, they interpret it. Trained on large datasets, AI learns to identify patterns and classify imagery into conceptual categories, for instance:

- nudity

- violence

- weapons

- graphic scenes

- specific objects or actions

But AI does not know whether it has seen the exact image before. Instead, it answers: “What type of content is this?”

This makes AI excellent for generalisation. Even if the AI has not seen a specific image before, it can still tell if there is adult content or harmful material in it. But those predictions are probabilistic, not guaranteed.
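The probabilistic nature of classification can be illustrated with a small sketch. It assumes a hypothetical model that outputs one raw score (logit) per category. A sigmoid turns each score into an independent probability, and a threshold decides which labels to report. The model, the scores, and the 0.5 threshold are made-up assumptions, not any specific vendor’s API.

```python
import math


def sigmoid(x: float) -> float:
    """Map a raw model score (logit) to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def classify(logits: dict[str, float], threshold: float = 0.5) -> dict[str, float]:
    """Convert per-category scores to probabilities and keep those at or above threshold.

    Each category is scored independently (multi-label), so an image can be
    flagged for several categories at once, or for none.
    """
    probs = {label: sigmoid(score) for label, score in logits.items()}
    return {label: p for label, p in probs.items() if p >= threshold}


if __name__ == "__main__":
    # Made-up scores from a hypothetical model for a single image.
    scores = {"nudity": -3.0, "violence": 2.0, "weapons": 0.0, "graphic scenes": -1.0}
    for label, p in classify(scores).items():
        print(f"{label}: {p:.2f}")
    # violence: 0.88
    # weapons: 0.50
```

Note that “weapons” sits exactly on the threshold. A slightly different score would flip the decision, which is what “probabilistic, not guaranteed” means in practice.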

Where Their Strengths Overlap: Content Moderation

Content moderation is a perfect example where both technologies shine, but in different ways.

Videntifier’s Role: Fast, Accurate, and Cheap

With Videntifier:

- A database of fingerprints for known harmful or illegal content is created.

- New content is scanned and checked against the database.

- If a match is found, even for heavily altered content, the system immediately returns what that content is and why it’s unwanted.

- If there is no match, the system simply reports: unknown.

This process is highly accurate, extremely fast, and cost-efficient.
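The lookup steps listed above can be sketched as a toy in-memory database. It maps 64-bit fingerprints to a label and a reason, and it accepts near matches within a small Hamming distance. The class and function names, the 64-bit fingerprint format, and the 5-bit threshold are hypothetical. This is not Videntifier’s API, and a production system would use an index rather than the linear scan shown here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class KnownItem:
    label: str   # what the content is
    reason: str  # why it is unwanted


class FingerprintDB:
    """Toy in-memory fingerprint database (hypothetical, for illustration only)."""

    def __init__(self, max_distance: int = 5) -> None:
        self.max_distance = max_distance
        self.entries: dict[int, KnownItem] = {}

    def add(self, fingerprint: int, item: KnownItem) -> None:
        # A new entry is searchable as soon as it is stored; nothing is retrained.
        self.entries[fingerprint] = item

    def lookup(self, fingerprint: int) -> Optional[KnownItem]:
        """Return the closest known item within max_distance bits, else None."""
        best: Optional[KnownItem] = None
        best_dist = self.max_distance + 1
        # Linear scan for clarity; a large database would need an index instead.
        for known_fp, item in self.entries.items():
            dist = bin(known_fp ^ fingerprint).count("1")
            if dist < best_dist:
                best, best_dist = item, dist
        return best


def check(db: FingerprintDB, fingerprint: int) -> str:
    """Report what a matched item is and why it is unwanted, or "unknown"."""
    item = db.lookup(fingerprint)
    return "unknown" if item is None else f"{item.label}: {item.reason}"


if __name__ == "__main__":
    db = FingerprintDB()
    db.add(0xFFFF0000FFFF0000, KnownItem("known clip #1", "previously flagged as illegal"))
    print(check(db, 0xFFFF0000FFFF0003))  # altered copy, 2 bits differ: match
    print(check(db, 0x0000FFFF0000FFFF))  # unknown
```

The `add` method also shows why fingerprint databases update instantly. Storing a new entry is enough to make that content recognisable on the very next lookup.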

AI’s Role: Recognising New or Unseen Threats

AI classification steps in when content is not already known.

The AI:

- analyses the material,

- recognises patterns indicating harmful content,

- and provides a classification, even for content it has never encountered before.

But because AI is probabilistic and depends on training data, its answers aren’t always certain—and retraining may become necessary as new content types emerge.

Efficiency Matters: Fingerprints Are Cheaper Than AI

A key difference lies in computational cost: checking a fingerprint database is dramatically cheaper than performing content moderation using AI.

For example, a large platform may spend only a few cents per million fingerprint checks, but performing AI-based moderation on the same volume could cost several dollars or more due to GPU inference and model maintenance.

In addition, fingerprint databases update instantly, so newly identified harmful content becomes recognisable right away, whereas updating an AI model requires retraining, fine-tuning, or re-engineering, which consumes time and resources.

This makes Videntifier ideal as the first line of defence in any moderation pipeline to ensure platform safety.

The Best Workflow: Fingerprints First, AI Second

For reliable, scalable content moderation, order matters:

- Videntifier first — to eliminate known unwanted content with certainty and minimal cost.

- Content moderation AI second — to examine unfamiliar content and identify new forms of harmful material.

This two-step process gives platforms:

- higher accuracy,

- fewer false positives,

- reduced costs,

- stronger protection against both familiar and emerging threats.

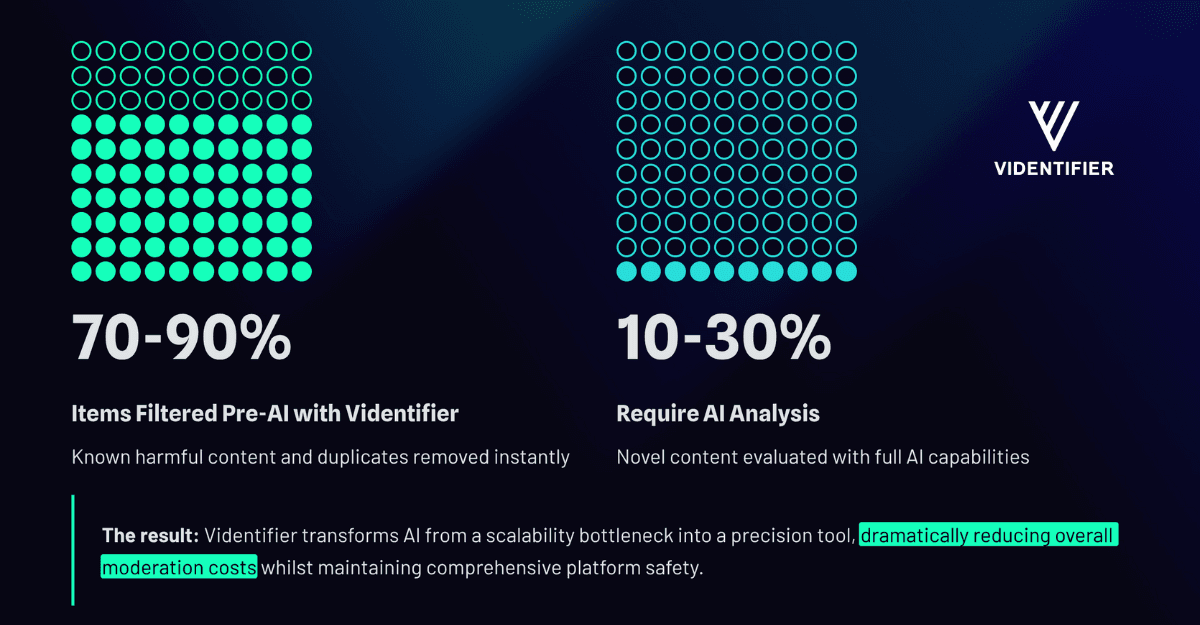
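The fingerprints-first ordering can be sketched as a small routing function. Both stages are passed in as plain functions: a hypothetical `fingerprint_lookup` that returns a label for known content (or `None`), and a hypothetical `ai_classify` that returns predicted categories. Neither corresponds to a real Videntifier or vendor API.

```python
from typing import Any, Callable, Optional


def moderate(
    items: dict[str, Any],
    fingerprint_lookup: Callable[[Any], Optional[str]],
    ai_classify: Callable[[Any], list[str]],
) -> tuple[dict[str, tuple[str, Any]], int]:
    """Run fingerprint matching first and send only unmatched items to the AI stage.

    Returns per-item results tagged with the stage that decided them, plus the
    number of AI calls made.
    """
    results: dict[str, tuple[str, Any]] = {}
    ai_calls = 0
    for item_id, item in items.items():
        known = fingerprint_lookup(item)
        if known is not None:
            # Stage 1: known content is resolved without any AI inference.
            results[item_id] = ("fingerprint", known)
            continue
        # Stage 2: unfamiliar content goes to the more expensive classifier.
        ai_calls += 1
        results[item_id] = ("ai", ai_classify(item))
    return results, ai_calls


if __name__ == "__main__":
    # Stand-ins for the two stages; both are made up for this example.
    known_db = {"fp-001": "known harmful video", "fp-002": "known spam image"}

    def fake_classifier(fp: str) -> list[str]:
        return ["possible violence"] if fp == "fp-777" else []

    uploads = {"u1": "fp-001", "u2": "fp-777", "u3": "fp-002", "u4": "fp-999"}
    results, ai_calls = moderate(uploads, known_db.get, fake_classifier)
    print(results)
    print(f"AI calls: {ai_calls} of {len(uploads)} items")
```

Keeping the stages as separate callables means either one can be swapped out, for example a different classifier vendor, without changing the routing logic.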

Example: Reduced Costs with a Combined Solution

Imagine a platform that processes 100 million items per day.

Without Videntifier, all 100 million items would be sent to AI, which is costly. With Videntifier as the first step, the 70–90% of items that are known harmful content or duplicates can be filtered out immediately. Only the remaining 10–30% need to be evaluated by AI, so AI-related costs drop dramatically.

In short, AI remains expensive on a per-item basis, but the total number of items requiring AI analysis becomes much smaller.

That’s why overall moderation costs decrease.
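The arithmetic behind this example can be sketched in a few lines. The two prices are assumptions chosen only to sit inside the ranges quoted earlier (“a few cents” per million fingerprint checks, “several dollars” per million AI checks). They are not real quotes. The sketch also assumes every item is fingerprinted and only unmatched items reach the AI stage.

```python
# Illustrative prices only, picked from the ranges quoted earlier in the article
# ("a few cents" and "several dollars" per million items); not real quotes.
FINGERPRINT_COST_PER_MILLION = 0.03  # USD, assumed
AI_COST_PER_MILLION = 5.00           # USD, assumed


def ai_only_cost(items_per_day: int) -> float:
    """Daily cost when every item is sent to the AI classifier."""
    return items_per_day / 1_000_000 * AI_COST_PER_MILLION


def daily_cost(items_per_day: int, filtered_fraction: float) -> float:
    """Daily cost when every item is fingerprinted and only unmatched items go to AI."""
    millions = items_per_day / 1_000_000
    fingerprint_cost = millions * FINGERPRINT_COST_PER_MILLION
    ai_cost = millions * (1 - filtered_fraction) * AI_COST_PER_MILLION
    return fingerprint_cost + ai_cost


if __name__ == "__main__":
    volume = 100_000_000  # items per day, as in the example above
    print(f"AI only: ${ai_only_cost(volume):.2f}")
    for filtered in (0.7, 0.9):
        print(f"{filtered:.0%} filtered first: ${daily_cost(volume, filtered):.2f}")
```

Under these assumed prices, the script reports $500.00 per day for AI alone, versus $153.00 or $53.00 when 70% or 90% of items are filtered out first. The per-item AI price never changes; only the number of items reaching the AI stage does.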

Building the Future Together

Seeing the synergy between these technologies, Videntifier is actively forming partnerships with providers of AI classification services.

The goal is to deliver combined solutions that pair Videntifier fingerprinting with AI-driven moderation tools for user-generated content (UGC). These solutions are meant to be more accurate, more cost-effective, and more general-purpose than either technology alone.

This hybrid approach represents the next generation of intelligent content moderation.